Governance for AI-Powered Knowledge Systems

Writing AI Agent ∙ Feb 17, 2026

Repetitive questions and scattered knowledge can slow down your team. AI-powered knowledge systems promise faster answers, but without proper governance, they can introduce risks like inaccurate outputs, compliance issues, and security vulnerabilities. Only 43% of organizations have formal AI governance, leaving many exposed to these challenges.

Key Takeaways:

AI systems streamline information access but risk spreading misinformation if not governed effectively.

Common issues include data silos, inaccurate responses, and compliance and security risks.

Governance frameworks should include verified data sources, cross-functional oversight, and detailed audit trails.

Tools like Question Base integrate with platforms like Notion and Salesforce, ensuring verified answers and enterprise-grade security.

With the right governance, AI systems can deliver reliable, secure, and compliant knowledge management. Read on to learn how to build a strong framework and why tools like Question Base are built for Slack-based teams.

Common Governance Challenges in AI Knowledge Systems

Data Silos and Integration Problems

In most enterprises, knowledge is scattered across platforms like Notion, Confluence, Google Drive, Salesforce, and countless others. When AI systems can't pull information from all these locations, the answers they provide are often incomplete. This leads to frustration for employees and wastes valuable time.

Relying solely on Slack chat history creates another layer of inefficiency. While conversations capture context and questions, they don’t replace the verified documentation stored in your repositories. Without a direct link to these trusted sources, AI systems may repeatedly miss critical information, forcing teams to ask the same questions over and over.

To overcome this, organizations need systems that connect directly to verified, up-to-date documentation. This ensures AI responses are grounded in reliable sources rather than fragmented chat logs or outdated personal files. Addressing these silos is a necessary first step toward improving accuracy and reducing bias in AI-generated responses.

Accuracy and Bias in AI Responses

AI systems that rely heavily on conversational context often struggle to distinguish between casual speculation and official policies. This can lead to inaccurate or even misleading responses.

One effective solution is human-in-the-loop verification, where subject matter experts review and approve AI-generated answers before they’re shared as official responses. Additionally, systems that draw from verified documentation significantly lower the risk of spreading outdated or biased information. Transparent audit trails allow users to trace an answer back to its source, making it easier to validate and trust the information provided.

"This is remarkable, especially how you can update the answer to a question by simply replying in Slack! This is a pretty cool way of solving the tough problem of knowledge base being hard to maintain." - Tony Han [1]

Escalation workflows are another key component. When an AI system can't find a reliable answer, it should escalate the query to a human expert rather than guessing. Features like feedback loops, such as thumbs-up or thumbs-down ratings, help knowledge managers identify and correct inaccuracies before they undermine trust within the organization.
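
As a rough illustration of such a feedback loop, the sketch below flags answers for review once enough ratings have come in and the thumbs-down share is high. The function name, the input shape, and both thresholds are assumptions made for this example, not part of any specific product.

```python
from collections import defaultdict


def flag_answers_for_review(ratings, min_votes=5, max_downvote_share=0.3):
    """Return IDs of answers whose thumbs-down share exceeds the limit.

    ratings: iterable of (answer_id, is_thumbs_up) pairs.
    min_votes and max_downvote_share are illustrative defaults.
    """
    up = defaultdict(int)
    down = defaultdict(int)
    for answer_id, is_thumbs_up in ratings:
        if is_thumbs_up:
            up[answer_id] += 1
        else:
            down[answer_id] += 1

    flagged = []
    for answer_id in sorted(set(up) | set(down)):
        total = up[answer_id] + down[answer_id]
        # Ignore answers with too few votes to be meaningful.
        if total >= min_votes and down[answer_id] / total > max_downvote_share:
            flagged.append(answer_id)
    return flagged
```

The minimum-vote check keeps a single thumbs-down from sending an otherwise sound answer back to an expert.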

Ensuring accuracy is critical, but compliance and security must also be prioritized to maintain trust and meet regulatory demands.

Compliance and Security Risks

AI systems managing internal knowledge must adhere to strict security and regulatory standards. Without robust safeguards, companies risk exposing intellectual property, allowing unauthorized access to confidential data, or violating regulations - any of which could result in hefty fines.

SOC 2 Type II is a widely recognized benchmark for enterprise security: an independent auditor attests that a vendor’s security controls operated effectively over a review period. Those controls typically include encryption both at rest and in transit. Paired with granular access controls, this ensures employees only access information they’re authorized to see. For organizations handling highly sensitive data, on-premise deployment options provide additional control over where data resides and how it’s processed.
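
To make the idea of granular access control concrete, here is a minimal sketch that filters candidate documents by group membership before any answer is generated. The `Document` type and the group names are hypothetical; a real deployment would read permissions from the connected tools.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    allowed_groups: frozenset  # groups permitted to read this document


def visible_documents(user_groups, documents):
    """Keep only documents that share at least one group with the user."""
    user_groups = frozenset(user_groups)
    return [d for d in documents if d.allowed_groups & user_groups]
```

Filtering before retrieval, rather than after the answer is drafted, means restricted content never reaches the model for that user.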

Auditability is equally crucial. Full source tracking and answer history are essential for meeting regulatory requirements. Systems that only offer basic usage stats leave organizations vulnerable during audits, making it difficult to prove compliance with frameworks like GDPR or industry-specific mandates. By embedding these features into their governance, companies can ensure both security and accountability.

How to Build a Governance Framework

AI Knowledge System Governance Framework: 3-Step Implementation Guide

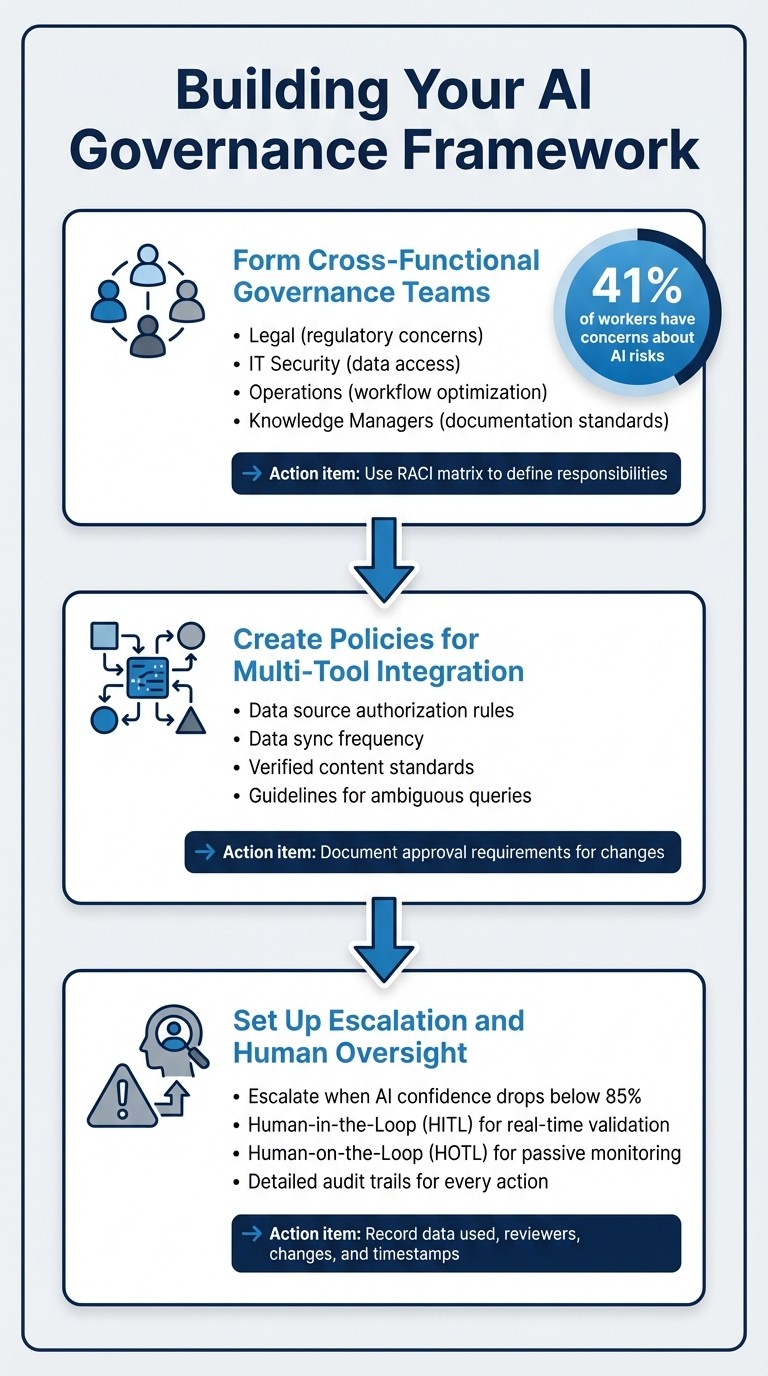

Form Cross-Functional Governance Teams

To establish effective governance, it's essential to bring together teams from Legal, IT, Operations, and frontline roles right from the start. This collaborative effort helps assess risks comprehensively and ensures accountability is clearly defined [2].

Identify key stakeholders who bring expertise from various angles of AI deployment. For instance, compliance officers can address regulatory concerns, IT security teams can handle data access, operations managers can streamline workflows, and knowledge managers can uphold documentation standards. Using a RACI matrix (Responsible, Accountable, Consulted, Informed) can clarify decision-making responsibilities and keep everyone aligned.
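
A RACI matrix can be kept as plain data and checked automatically. The sketch below assumes the common convention that each decision has exactly one Accountable party and at least one Responsible party; the decision and team names in the usage note are illustrative only.

```python
RACI_ROLES = {"R", "A", "C", "I"}


def validate_raci(matrix):
    """Return a list of problems found in a RACI matrix.

    matrix maps each decision to a {team: role} dict, where role is
    one of "R", "A", "C", "I".
    """
    problems = []
    for decision, assignments in matrix.items():
        unknown = set(assignments.values()) - RACI_ROLES
        if unknown:
            problems.append(f"{decision}: unknown roles {sorted(unknown)}")
        accountable = [team for team, role in assignments.items() if role == "A"]
        if len(accountable) != 1:
            problems.append(
                f"{decision}: expected exactly one Accountable, found {len(accountable)}"
            )
        if not any(role == "R" for role in assignments.values()):
            problems.append(f"{decision}: no Responsible team")
    return problems
```

For example, a row such as `{"IT Security": "A", "Knowledge Management": "R", "Legal": "C", "Operations": "I"}` passes, while a row with only `{"Operations": "R"}` is reported as missing an Accountable party.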

"Beginning with cross-functional oversight: Bring Legal, IT, Ops, and frontline teams together from the start to ensure comprehensive risk assessment." – Slack [2]

This approach is crucial, as 41% of workers have expressed concerns about risks tied to AI, particularly around privacy, copyright, and liability [2].

Once the roles are clearly defined, the focus should shift to creating integration policies that span across tools.

Create Policies for Multi-Tool Integration

Developing clear policies for integrating various tools is a key step. This includes defining rules for data source authorization, determining how often data syncs, and configuring integration settings. Establish verified content standards and create guidelines for handling ambiguous queries, such as addressing tone and acknowledging uncertainty.

Document which teams have the authority to adjust these settings and require approvals before implementing any changes. This ensures that responses remain consistent and align with the organization's communication standards.
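
One way to make such policies enforceable is to express them as data that the system checks before using a source. The following is a sketch under assumed names: the source keys, the sync intervals, and the `source_status` helper are all hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical policy: source names and sync intervals are illustrative.
INTEGRATION_POLICY = {
    "notion": {"authorized": True, "max_sync_age": timedelta(hours=24)},
    "confluence": {"authorized": True, "max_sync_age": timedelta(hours=6)},
    "personal_drive": {"authorized": False, "max_sync_age": None},
}


def source_status(source, last_synced, now, policy=INTEGRATION_POLICY):
    """Classify a source as 'unauthorized', 'stale', or 'ok'."""
    rule = policy.get(source)
    # Sources missing from the policy are treated as unauthorized.
    if rule is None or not rule["authorized"]:
        return "unauthorized"
    if now - last_synced > rule["max_sync_age"]:
        return "stale"
    return "ok"
```

Keeping the policy in one reviewed structure also gives the approval process a single place to record who changed a setting and why.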

After setting these policies, it's important to establish robust escalation mechanisms.

Set Up Escalation and Human Oversight

Build escalation workflows to ensure human intervention when AI confidence drops below 85% or when sensitive issues arise [3] [5]. Pass the full context along with the escalation - such as prior interactions, AI analysis, and confidence scores - so the expert does not have to reconstruct the conversation [3] [5].
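
The routing rule described here can be sketched in a few lines. The 85% threshold comes from the text above; the `AiAnswer` type, its field names, and the assumption that confidence is a number between 0 and 1 are illustrative choices for this example.

```python
from dataclasses import dataclass, field

CONFIDENCE_THRESHOLD = 0.85  # the 85% figure from the text


@dataclass
class AiAnswer:
    question: str
    text: str
    confidence: float  # assumed to be in [0.0, 1.0]
    is_sensitive: bool = False
    prior_messages: list = field(default_factory=list)


def route(answer):
    """Return ('send', answer_text) or ('escalate', context_dict)."""
    if answer.confidence < CONFIDENCE_THRESHOLD or answer.is_sensitive:
        # Bundle everything the human expert needs in one payload.
        context = {
            "question": answer.question,
            "draft_answer": answer.text,
            "confidence": answer.confidence,
            "prior_messages": list(answer.prior_messages),
            "reason": "sensitive" if answer.is_sensitive else "low_confidence",
        }
        return "escalate", context
    return "send", answer.text
```

Note that a sensitive question is escalated even when confidence is high, which matches the "or" in the rule above.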

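Differentiate between real-time validation (Human-in-the-Loop or HITL), where a person reviews and approves an output before it takes effect, and passive monitoring (Human-on-the-Loop or HOTL), where the AI acts on its own while a person watches and can step in [7].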

"By maintaining human oversight, organizations can effectively spot discrepancies and address them before they escalate into larger issues." – Tines [4]

Maintain detailed audit trails for every AI-assisted action. Record the data used, who reviewed the output, any changes made, and when the review occurred [6]. This not only ensures accountability but also supports compliance during audits.
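
The four items listed above map naturally onto a small record type. This is a minimal sketch, assuming an append-only JSON-lines log; the `AuditRecord` fields and helper names are hypothetical and would need to match your own compliance requirements.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    action_id: str
    sources_used: tuple   # the data used
    reviewer: str         # who reviewed the output
    changes_made: str     # any changes made during review
    reviewed_at: str      # when the review occurred, ISO 8601


def make_audit_record(action_id, sources_used, reviewer, changes_made, reviewed_at=None):
    """Build a record, defaulting the review time to the current UTC time."""
    reviewed_at = reviewed_at or datetime.now(timezone.utc)
    return AuditRecord(
        action_id, tuple(sources_used), reviewer, changes_made, reviewed_at.isoformat()
    )


def to_log_line(record):
    """Serialize one record as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)
```

Making the record immutable (`frozen=True`) is a deliberate choice here: an audit entry should be written once and never edited in place.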

Monitoring, Auditing, and Improvement

Keep an Eye on Analytics and Performance Metrics

Effective governance doesn’t stop at setting policies - it thrives on continuous oversight through analytics and audits. Regularly measuring performance is key to ensuring your AI operates effectively. For instance, tracking resolution rates helps you see how often the AI resolves questions without needing human help. Question Base, for example, reports automation rates above 90% for repetitive questions, a benchmark worth aiming for [1].
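
Resolution rate is simple arithmetic: questions resolved by the AI divided by all questions asked. The sketch below assumes each question has already been labeled with one of two outcome strings, which are made up for this example.

```python
def resolution_rate(outcomes):
    """Share of questions resolved without human help.

    outcomes: iterable of strings, each "resolved_by_ai" or "escalated".
    Returns None when there are no questions, to avoid dividing by zero.
    """
    outcomes = list(outcomes)
    if not outcomes:
        return None
    resolved = sum(1 for o in outcomes if o == "resolved_by_ai")
    return resolved / len(outcomes)
```

With 9 questions resolved by the AI and 1 escalated, the rate is 9 / 10 = 0.9, which sits right at the 90% benchmark mentioned above.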

Pay close attention to unanswered queries and low-confidence responses. These are the areas where your system may struggle and where human intervention becomes necessary. While basic Slack AI usage stats might provide some insights, Question Base goes further by offering detailed analytics on resolution rates, automation performance, and content gaps. These insights not only guide improvement but also tie directly into compliance-focused audit trails.

Ensure Audit Trails and Compliance Transparency

For compliance and accountability, every AI interaction needs to be traceable. This means documenting the data sources the AI accessed, the answers it provided, and whether a human reviewed or adjusted those responses. Such records are indispensable during regulatory reviews or internal quality checks.

"The expert verification process gives us confidence that every answer meets our compliance standards." – Question Base [1]

Question Base simplifies this process by automatically generating comprehensive audit trails as part of its enterprise-grade compliance framework. Built to support SOC 2 Type II standards, these trails not only ensure transparency but also help organizations stay ahead of regulatory changes. Beyond compliance, these records serve as a foundation for refining knowledge management strategies.

Close Knowledge Gaps and Adapt to Evolving Needs

Analytics are a powerful tool for identifying unresolved queries and pinpointing content gaps caused by outdated or missing documentation. If the same unanswered questions keep cropping up, it’s a clear sign that your documentation needs attention. Regularly revisiting these patterns ensures your knowledge base stays relevant.
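
A first pass at spotting recurring unanswered questions can be done by normalizing the text and counting repeats. This is a deliberately simple sketch: the normalization rules and the `min_count` default are assumptions, and it only catches near-identical wording, not paraphrases.

```python
from collections import Counter


def normalize(question):
    """Lowercase, collapse whitespace, and drop trailing punctuation."""
    return " ".join(question.lower().split()).rstrip("?!. ")


def recurring_gaps(unanswered_questions, min_count=2):
    """Return (question, count) pairs seen at least min_count times."""
    counts = Counter(normalize(q) for q in unanswered_questions)
    return [(q, n) for q, n in counts.most_common() if n >= min_count]
```

Each pair in the result is a candidate for a new or updated document, with the most frequently asked question listed first.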

Question Base takes this a step further with features like gap analysis and duplicate detection, helping knowledge managers identify where documentation is falling short. By tracking unanswered questions and spotting recurring patterns across teams and channels, the platform shifts your approach from reactive problem-solving to proactive knowledge management. This ensures your AI system grows and adapts alongside your organization’s changing needs.

How Question Base Supports AI Governance in Slack

Verified Answers from Trusted Sources

For teams relying on Slack as their main communication hub, Question Base ensures that responses come from authenticated, reliable sources rather than casual conversations. Unlike Slack AI, which pulls information from previous chat messages, Question Base connects directly to verified documentation stored in platforms like Notion, Confluence, Salesforce, Google Drive, and more.

The platform reinforces security by adhering to connected-tool permissions, ensuring employees only access information they’re authorized to see. Answers are reviewed and validated by subject matter experts before being added, creating a human-in-the-loop process that scales accuracy while maintaining control.

"Question Base has become our single source of truth. The expert verification process gives us confidence that every answer meets our compliance standards." – Question Base [1]

Security is equally important - and Question Base’s compliance features ensure your data remains safeguarded every step of the way.

Enterprise-Grade Security and Compliance

Question Base is designed with enterprise-level security in mind. It meets SOC 2 Type II standards, encrypting data both at rest and in transit. For organizations needing extra control, an optional on-premise deployment is available. Detailed audit trails log every access event, answer history, and content change, offering critical transparency for compliance reporting and security investigations. With a 99.9% uptime SLA [1], Question Base provides the reliability enterprises require.

The platform also integrates seamlessly with internal security policies through role-based access controls and approval workflows, ensuring users only access authorized information while keeping compliance teams informed at all times.

Customizable AI Behavior and Escalation Flows

Beyond verified data and robust security, Question Base offers granular control over AI behavior. Teams can customize AI tone, behavior, and escalation processes. When the system encounters low-confidence scenarios, it flags the gap and automatically escalates the query to experts. This approach helps teams achieve over a 90% automation rate, saving experts more than 6 hours per week [1].

For larger enterprises, Question Base supports multi-workspace management and white-labeling, ensuring consistent knowledge governance across multiple Slack workspaces or Enterprise Grid setups. This level of customization keeps experts in the loop, ensures accountability, and strengthens governance across the board.

Conclusion

AI-powered knowledge systems can significantly enhance productivity, but without a solid governance framework, they can introduce risks to accuracy, compliance, and security. Establishing a structured approach - encompassing cross-functional collaboration, clear policies, human oversight, and continuous monitoring - is critical to ensuring these tools align with organizational goals while maintaining trust and meeting regulatory requirements. This balance is key to safeguarding both operational effectiveness and compliance.

For teams relying on Slack as their primary workspace, Question Base offers a tailored solution to tackle governance challenges effectively. By connecting to verified sources like Notion, Confluence, and Salesforce, it ensures that responses are based on trusted, expert-approved knowledge rather than unstructured chat logs. With enterprise-level security and customizable oversight features, Question Base meets the high accountability standards required by modern enterprises.

The impact is clear: organizations using Question Base report over 90% automation rates for repetitive questions, saving experts more than 6 hours per week [1]. Beyond the numbers, it empowers teams to maintain control over AI behavior, keep compliance teams informed, and create a dynamic knowledge base that adapts to their evolving needs. This seamless integration of reliable documentation and robust governance practices reflects the framework discussed here.

For organizations ready to prioritize AI governance and transform Slack into a secure, compliant knowledge assistant, Question Base delivers the enterprise-grade infrastructure and flexibility needed from the very start.

FAQs

What should an AI knowledge governance policy include?

An effective AI knowledge governance policy begins with ensuring data quality and accuracy. This means relying on trusted sources such as Confluence or Salesforce to minimize the risk of outdated or incorrect information making its way into responses.

The policy should also emphasize security and compliance. This includes implementing role-based access controls, encrypting sensitive data, and adhering to established standards like SOC 2 Type II to safeguard information.

Equally important are transparency, human oversight, and regular audits. These practices help maintain trust, keep AI performance in check, and address potential biases or errors with precision and accountability.

How do we prevent the AI from providing unverified information?

To deliver verified answers, platforms like Question Base prioritize information from trusted, expert-approved sources such as Notion, Confluence, and Salesforce, instead of relying solely on chat history. This method ensures both accuracy and auditability. Complementing this, practices like regular content audits, role-based access controls, and human oversight further safeguard the quality of responses. With Question Base, you gain tools to manage content effectively and escalate complex queries to human experts when necessary, ensuring reliable support.

What logs are required for security and compliance audits?

To meet security and compliance audit requirements, it’s crucial to maintain detailed records of access permissions, user activity, data access, and audit trails. When working with Slack integrations, ensure that role-based access control (RBAC) is properly implemented to manage who can access what information.

Additionally, pay close attention to how data is shared with external tools like Question Base, Notion, and Confluence. Comprehensive logging should capture every interaction - this includes who accessed data, what actions were taken, and when they occurred. These logs not only help with audits but also provide a clear trail for accountability and troubleshooting.